When operating workloads on AWS, most organizations do not invest in building a centralized observability platform that correlates performance metrics in one place. With Amazon CloudWatch, one can use the built-in Observability Access Manager (OAM) feature to link multiple source accounts with a central monitoring account. Important indicators of performance bottlenecks, such as logs, metrics, and traces, then become available from multiple accounts in one central location, and sharing logs and metrics this way carries no additional charge.

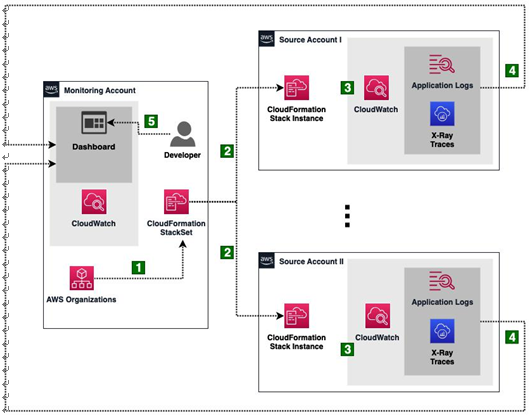

Using the AWS Organizations service, one can automatically configure the collection of observability data using CloudFormation StackSets or a similar deployment framework. Let’s see how these steps look in practice, in Figure 12.4:

Figure 12.4: Cross-account observability to observe metrics and identify performance bottlenecks

Let’s look at this in a bit more detail, as follows:

- CloudFormation StackSets, under the hood, hooks into AWS Organizations events that notify the service of any new accounts that are created in the organization.

- CloudWatch OAM requires data sharing to be configured from the source accounts. This can be easily accomplished using StackSets stack instances, which set up the required configuration in each account.

- After the stack instance deployment is complete, observability data, such as logs, metrics, and X-Ray traces, is automatically shared with the central monitoring account.

- Software developers, or members of the operations team, can view the aggregated metrics in the central account to understand system behaviors and identify performance bottlenecks. Once they are identified, additional measures can be taken to improve performance or right-size resources for better efficiency.
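The source-account side of the steps above can be sketched as a CloudFormation template that a StackSet would roll out to each member account. The following is a minimal illustration under stated assumptions, not a reference implementation: it builds an `AWS::Oam::Link` resource in Python and prints it as JSON. The sink ARN, the logical ID `MonitoringLink`, and the helper name `build_link_template` are placeholders chosen for this example.

```python
import json

# Telemetry types to share with the monitoring account.
SHARED_RESOURCE_TYPES = [
    "AWS::CloudWatch::Metric",
    "AWS::Logs::LogGroup",
    "AWS::XRay::Trace",
]


def build_link_template(sink_arn: str) -> dict:
    """Return a CloudFormation template that links a source account to a sink.

    sink_arn is the ARN of the OAM sink created in the monitoring account.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Share observability data with the monitoring account",
        "Resources": {
            "MonitoringLink": {  # hypothetical logical ID
                "Type": "AWS::Oam::Link",
                "Properties": {
                    # Label shown for this account in the monitoring account.
                    "LabelTemplate": "$AccountName",
                    "ResourceTypes": SHARED_RESOURCE_TYPES,
                    "SinkIdentifier": sink_arn,
                },
            }
        },
    }


if __name__ == "__main__":
    # Placeholder ARN; replace with the sink ARN from the monitoring account.
    example_sink = "arn:aws:oam:us-east-1:111111111111:sink/EXAMPLE-SINK-ID"
    print(json.dumps(build_link_template(example_sink), indent=2))
```

The monitoring account additionally needs an OAM sink whose policy allows the source accounts to create links. That part is not shown here.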

Minimizing cloud costs while maximizing business value creation

The Cost Optimization pillar of the AWS Well-Architected Framework focuses on optimizing the use of cloud resources to deliver maximum business value at the lowest cost. By understanding resource usage and applying cost management practices, users can make informed decisions to optimize their cloud spend. Some best practices are recommended in this area, which we’ll look at next.

Best practices for reducing your cloud spend

Depending on particular use cases and application needs, certain measures can result in substantial cloud spend reduction. Let’s discuss a few practices that can benefit most applications.

Aligning consumption with business needs

Not all compute resources have to be running all the time. Unlike production workloads, development and test environments can generally be stopped completely outside working hours. Additionally, configuring low-cost resource types for these non-critical environments can result in cost savings that can be redirected to experimental or research and development work, creating room for innovation.
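As a rough sketch of this idea, the decision "should this environment be running right now?" can be expressed as a small function that a scheduled job would consult before starting or stopping resources. The working hours, the environment names, and the function name `should_be_running` are assumptions made for this example, and the timestamp is treated as local time.

```python
from datetime import datetime, time

# Assumed working hours for non-production environments (local time).
WORK_START = time(8, 0)
WORK_END = time(18, 0)

# Environments that must never be stopped by the schedule.
ALWAYS_ON = {"production"}


def should_be_running(environment: str, now: datetime) -> bool:
    """Return True if the environment should be up at the given moment."""
    if environment in ALWAYS_ON:
        return True
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and WORK_START <= now.time() < WORK_END


if __name__ == "__main__":
    for env in ("production", "development", "test"):
        print(env, should_be_running(env, datetime.now()))
```

The actual start and stop actions (for example, through a scheduled function that calls the relevant service APIs) are left out on purpose, since they depend on the resource types in use.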

Analyzing cost anomalies

Regularly review cost and billing reports to identify unexpected cost increases and investigate the reasons for sudden cost spikes. Making software teams aware of the cost impact of their architectural decisions is a good first step.
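To make the notion of a "sudden cost spike" concrete, here is a deliberately simple sketch that flags days whose cost is well above the trailing average. It is an illustrative heuristic, not the method used by any AWS service. The window size, the threshold, the 5% floor, and the function name `find_cost_spikes` are assumptions for this example.

```python
from statistics import mean, stdev


def find_cost_spikes(daily_costs, window=7, threshold=3.0):
    """Return indexes of days whose cost exceeds the trailing baseline.

    A day is flagged when its cost is greater than the mean of the previous
    `window` days plus `threshold` times their spread. `window` must be >= 2.
    """
    spikes = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        baseline = mean(history)
        # Use at least 5% of the baseline as spread, so that a nearly flat
        # history does not flag insignificant day-to-day changes.
        spread = max(stdev(history), 0.05 * baseline)
        if daily_costs[i] > baseline + threshold * spread:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    costs = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 103.0, 180.0, 101.0]
    print(find_cost_spikes(costs))  # index 7 (the 180.0 day) is flagged
```

A check like this only points to where to look. The investigation itself still means breaking the flagged day down by account, service, and resource.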

Opting for cost-effective alternatives when possible

Services such as AWS Lambda offer serverless computing resources with a pay-as-you-go model, so you can match capacity to your specific requirements and financial constraints. Because they bill you only for actual usage, they eliminate the need to manage and pay for dedicated server resources that might remain idle for a substantial period of time.
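The trade-off can be illustrated with some back-of-the-envelope arithmetic. The sketch below compares a pay-per-use bill (a per-request charge plus a charge per GB-second of compute) with a fixed monthly cost for an always-on server. All rates and workload figures are placeholder values for illustration, not a price quote, and free-tier allowances are ignored. Check the current pricing pages before relying on any numbers.

```python
def monthly_pay_per_use_cost(requests, avg_duration_s, memory_gb,
                             price_per_million_requests, price_per_gb_second):
    """Estimate a monthly bill under a request + GB-second pricing model."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # Placeholder workload and rates, chosen only for illustration.
    serverless = monthly_pay_per_use_cost(
        requests=1_000_000,
        avg_duration_s=0.2,
        memory_gb=0.5,
        price_per_million_requests=0.20,
        price_per_gb_second=0.00002,
    )
    always_on_server = 30.0  # hypothetical fixed monthly cost
    print(f"pay-per-use: {serverless:.2f}, always-on: {always_on_server:.2f}")
```

With a low or bursty request volume, the usage-based bill stays small, while the always-on cost is the same whether the server is busy or idle. At sustained high volume the comparison can reverse, so it is worth rerunning the arithmetic with real traffic figures.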

To optimize cloud costs, it is important to set up automated reporting and analysis of resource consumption. A common enterprise pattern for this is to use AWS Cost and Usage Reports (CUR). Figure 12.5 describes how one could approach collection, automated analysis, and reporting with such a solution.